The War for Workstations and the MIT and New Jersey Schools

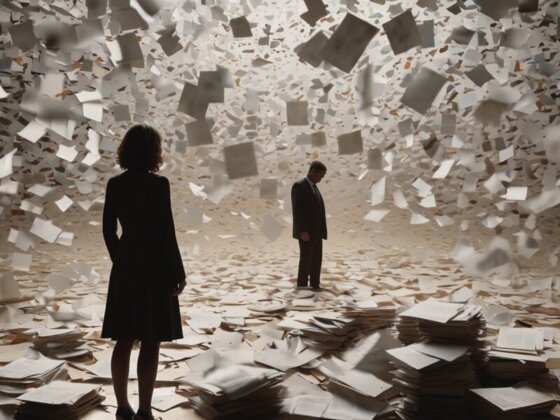

Dig into the stories of 1980s operating systems, and a forgotten war for the future of computing emerges. It was won by the lowest bidders, and then the poor users and programmers forgot it ever happened.

Retrocomputing is a substantial, and still growing, interest for many techies and hobbyists, and has been for a decade or two now. There’s only so much you can write about a game that’s decades old, but operating systems and the different paths they’ve taken still have rich veins to be explored.

Anyone who played with 1980s computers remembers the platform wars and other battles. But digging down past the stratum of cheap home computers and gaming reveals bigger, more profound differences. The winners of the battles got to write the histories, as they always do, and that means that what is now received wisdom, shared and understood by almost everyone, contains and conceals propaganda and dogma. Things that were once just marketing BS are now holy writ, and when you discover how the other side saw it, dogma is uncovered as just big fat lies.

The biggest lie

The first of the big lies is the biggest, but it’s also one of the simplest, one that you’ve probably never questioned.

It’s this: Computers today are better than they have ever been before. Not just that they have thousands of times more storage and more speed, but that everything, the whole stack – hardware, operating systems, networking, programming languages and libraries and apps – is better than ever.

The myth is that early computers were simple, and they were replaced by better ones that could do more. Gradually, they evolved, getting more sophisticated and more capable, until now, we have multi-processor multi-gigabyte supercomputers in our pockets.

Which gives me an excuse to use my favorite German quote, generally attributed to physicist Wolfgang Pauli: „Das ist nicht nur nicht richtig; es ist nicht einmal falsch!“ (That is not only not right, it is not even wrong!)

Well, that is where we are today.

Evolution

The first computers, of course, were huge room-sized things that cost millions. They evolved into mainframes: very big, very expensive, but a whole company could share one, running batch jobs submitted on punched cards and stuff like that. After a couple of decades, mainframes were replaced by minicomputers, shrunk to the size of filing cabinets, but cheap enough for a mere department to afford. They were also fast enough that multiple users could use them at the same time, using interactive terminals. All the mainframes’ intelligent peripherals, networked to their CPUs, and their sophisticated, hypervisor-based operating systems, with rich role-based security, were just thrown away.

Then, it gets more complicated. The conventional story, for those who have looked back to the 1970s, is that microcomputers came along, based on cheap single-chip microprocessors, and swept away minicomputers. Then they gradually evolved until they caught up. But that’s not really true.

First, and less visibly because they were so expensive, department-scale minicomputers shrank down to desk-sized, and then desk-side, and later desk-top, workstations. Instead of being shared by a department, these were single-user machines. Very powerful, very expensive, but just about affordable for one person – as long as they were someone important enough.

Meanwhile, down at the budget end and at the same time as these tens-of-thousands-of-dollar single-user workstations, dumb terminals evolved into microcomputers. Every computer in the world today is, at heart, a “micro.”

At first, they were feeble. They could hardly do anything. So, this time around, we lost tons of stuff.

How we got here

Those early-1980s micros, the weakest, feeblest, most pathetic computers since the first 1940s mainframes, the early eight-bit micros, those are the ancestors of the computers you use today.

In fact, of all the early 1980s computers, the one with the most boring, unoriginal design, the one with no graphics and no sound – that, with a couple of exceptions, is the ancestor of what you use today. It was the IBM PC, which got expanded and enhanced over and over again to catch up with and eventually, over about 15 years, exceed the abilities of its cheaper but cleverer rivals.

The trouble is that when you try to put back features that you eliminated, the result is never as good as if you had designed them in at the beginning.

We run the much-upgraded descendants of the simplest, stupidest, and most boring computer that anyone could get to market. It was the easiest to build and to get working. Easy means cheap, and cheap sells more and makes more profit. So it is the one that won.

There’s a proverb about choosing a product: “You can have good, fast, and cheap. Choose any two!”

We got “fast” and “cheap.” We lost “good,” replaced by “reliable,” which is definitely a virtue, but one that comes with an extremely high price.

What we lost

Like many a middle-aged geek, I once collected 1980s hardware, because what had been inaccessible when it was new – because I couldn’t afford it – was being given away for free. However, it got bulky and I gave most of it away, focusing on battery-powered portable kit instead, partly because it’s interesting in its own way, and partly because it doesn’t take up much room. You don’t need to keep big screens and keyboards around.

That led me to an interesting machine: the other line of Apple computers, the ones that Steve Jobs had no hand in at all.

The machine that inspired Jobs, which led to the Lisa and then the Mac, was of course the Xerox Alto, a $30,000 deskside workstation. In Jobs’ own words, he saw three amazing technologies that day, but he was so dazzled by one of them that he missed the other two. He was so impressed by the GUI that he missed the object-oriented graphical programming language, Smalltalk, and the Alto’s networking. The Lisa had none of that. The Mac had less. Apple spent much of the next 20 years trying to put them back. In that time, Steve Jobs hired John Sculley from PepsiCo to run Apple, and in return, Sculley fired Jobs. What Apple came up with during Sculley’s reign was the Newton.

I have two Newtons, an Original MessagePad and a 2100. I love them. They’re a vision of a different future. But I never used them much: I used rival handhelds, which had a far simpler and less ambitious design, meaning that they were cheaper but did the job. This should be an industry proverb.

The Newton that shipped was a pale shadow of the Newton that Apple originally planned. There are traces of that machine out there, though, and that’s what led to me uncovering the great computing war. The Newton was – indeed, still is – a radical machine. It’s designed to live in your pocket, store and track your information and habits. It had an address book, a diary, a note-taking app, astonishing handwriting recognition, and a primitive AI assistant. You could write “lunch with Alice” on the screen, and it would work out what you wrote, analyze it, work out from your diary when you normally had lunch, from your call history where you took lunch most often and which Alice you contacted most often, book a time slot in your diary and send her a message to ask her if she’d like to come. It was something like Siri, but 20 years earlier, and in that time, Apple seems to have forgotten all this: It had to buy Siri in.

NewtonOS also had no file system. I don’t mean it wasn’t visible to the user; I mean there wasn’t one. It had some non-volatile memory on board, expandable via memory cards – huge PCMCIA ones the size of half-centimetre-thick credit cards – and it kept stuff in a sort of OS-integrated object database, segregated by function. The stores were called “soups” and the OS kept track of what was stored where. No file names, no directories, nothing like that at all. Apps, and some of the OS itself, were written in a language called NewtonScript, which is very distantly related to both AppleScript on modern macOS and JavaScript. But that was not the original plan. That was for a far more radical OS, in a more radical language, one that could be developed in an astounding graphical environment. The language was called Dylan, which is short for Dynamic Language. It still exists as a FOSS compiler. Apple seems to have forgotten about it too, because it reinvented that wheel, worse, with Swift.

Dylan is amazing, and its development environment even more so (a few traces are left). Dylan is very readable, very high-level, and before the commercial realities of time and money prevailed, Apple planned to write an OS, and the apps for that OS, in Dylan.

Now that is radical: Using the same, very high-level, language for both the OS and the apps.
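To make the earlier idea of “soups” a little more concrete, here is a minimal sketch in Python of a store with no file names and no directories: entries are bags of named slots, grouped into soups by function, and found by their content. This is purely illustrative. The class and method names (`Soup`, `ObjectStore`, `add`, `query`) are invented for this sketch and are not the real NewtonOS or NewtonScript API.

```python
from __future__ import annotations

from itertools import count
from typing import Any, Callable


class Soup:
    """One named collection of entries, e.g. all contacts (hypothetical design)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self._entries: dict[int, dict[str, Any]] = {}
        self._ids = count(1)

    def add(self, **slots: Any) -> int:
        """Store an entry as named slots; the store assigns its identity, not the user."""
        entry_id = next(self._ids)
        self._entries[entry_id] = dict(slots)
        return entry_id

    def query(self, predicate: Callable[[dict[str, Any]], bool]) -> list[dict[str, Any]]:
        """Find entries by what they contain rather than by a path or file name."""
        return [entry for entry in self._entries.values() if predicate(entry)]


class ObjectStore:
    """Keeps soups segregated by function; there is no directory tree to navigate."""

    def __init__(self) -> None:
        self._soups: dict[str, Soup] = {}

    def soup(self, name: str) -> Soup:
        # Create the soup on first use, then always hand back the same one.
        if name not in self._soups:
            self._soups[name] = Soup(name)
        return self._soups[name]


store = ObjectStore()
names = store.soup("Names")
names.add(first="Alice", last="Example", phone="555-0100")
names.add(first="Bob", last="Example", phone="555-0101")
store.soup("Calendar").add(title="lunch with Alice", hour=13)

# Any app can ask the store a question about the data itself.
alices = names.query(lambda entry: entry["first"] == "Alice")
```

The point of the sketch is the shape of the interface: an app never opens or saves anything, it just asks the store for entries that match, which is roughly what the “lunch with Alice” trick would need underneath.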

This is what we lost

I started digging into that, and that’s when the ground crumbled away, and I found that, like some very impressive CGI special effects, I wasn’t excavating a graveyard but a whole, hidden, ruined city.

Once, Lisp ran on the bare metal, on purpose-built computers that ran operating systems written in Lisp, ones with GUIs that could connect to the internet. The company’s dead, but the OS, OpenGenera, is still available and you can run it on Linux. It’s the end result of several decades of totally separate evolution from the whole Mac/Windows/Unix world, so it’s kind of arcane, but it’s out there.

One of the more persuasive accounts is from a man called Kalman Reti, the last working Symbolics engineer. So loved are these machines that people are still working on their 30-year-old hardware, and Reti maintains them. He’s made some YouTube videos demonstrating OpenGenera on Linux.

He describes the process of implementing the single-chip Lisp machine processors:

“We took about ten to 12 man-years to do the Ivory chip, and the only comparable chip that was contemporaneous to that was the MicroVAX chip over at DEC. I knew some people that worked on that and their estimates were that it was 70 to 80 man-years to do the MicroVAX. That in a nutshell was the reason that the Lisp machine was great.”

‘Better is the enemy of good’

The conclusion, though, is the stinger:

Time has certainly proved Dr Gabriel correct. Systems of the New Jersey school so dominate the modern computing industry that the other way of writing software is banished to a tiny niche.

If you want to become a billionaire from software, you don’t want rockstar geniuses; you need workers who are interchangeable and inexpensive.

Software built with tweezers and glue is not robust, no matter how many layers you add. It’s terribly fragile, and needs armies of workers constantly fixing its millions of cracks and holes.

There was once a better way, but it lost out to cold hard cash, and now, only a few historians even remember it existed.